On Wednesday 14 June 2023, the European Parliament adopted its negotiating position on the Artificial Intelligence Act (“AI Act”), a regulation governing the development and use of artificial intelligence (AI) within the European Union. The text, which is said to hold the record for legislative amendments, is now being discussed by the Member States in the Council. The aim is to reach an agreement by the end of the year.

While the date on which the AI Act will come into force remains uncertain, companies involved in the AI sector have every interest in anticipating this future regulation.

What are the main measures?

Objectives

The regulation harmonises Member States’ legislation on AI systems, thereby providing legal certainty that is conducive to innovation and investment in this field. The text is intended to be protective but balanced, so as not to hinder the innovation needed to meet the challenges of the future (such as climate change, the environment and health).

Like the General Data Protection Regulation (GDPR), whose logic it follows throughout its articles, the AI Act aims to establish itself as a global benchmark.

The scope of application is deliberately broad in order to prevent circumvention of the rules. It covers both AI suppliers (who develop an AI system, or have one developed, with a view to placing it on the market or putting it into service under their own name or brand) and users (who use an AI system under their own authority, except where the system is used in the context of a personal, non-professional activity).

In practical terms, it applies to:

- suppliers, established in the EU or in a third country, who place AI systems on the market or put them into service in the EU;

- users of AI systems located in the EU;

- suppliers and users of AI systems located in a third country, where the results generated by the system are used in the EU.
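As a rough illustration only, the three cases above and the personal-use exception can be expressed as a simple check. The `Actor` class, its field names and the `in_scope` function are hypothetical names chosen for this sketch; it simplifies the text considerably and is not a substitute for legal analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Actor:
    """Hypothetical, simplified description of a party dealing with an AI system."""
    role: str                          # "supplier" or "user" (labels assumed for this sketch)
    located_in_eu: bool                # where the party itself is located
    places_on_eu_market: bool = False  # supplier places the system on the market or into service in the EU
    output_used_in_eu: bool = False    # results generated by the system are used in the EU
    personal_use_only: bool = False    # user acting in a personal, non-professional capacity


def in_scope(actor: Actor) -> bool:
    """Return True if one of the three listed cases appears to apply."""
    # Exception: use in the context of a personal non-professional activity.
    if actor.role == "user" and actor.personal_use_only:
        return False
    # Case 1: suppliers, wherever established, placing systems on the EU market.
    if actor.role == "supplier" and actor.places_on_eu_market:
        return True
    # Case 2: users located in the EU.
    if actor.role == "user" and actor.located_in_eu:
        return True
    # Case 3: third-country suppliers and users whose system's results are used in the EU.
    if not actor.located_in_eu and actor.output_used_in_eu:
        return True
    return False
```

For example, `in_scope(Actor(role="user", located_in_eu=False, output_used_in_eu=True))` returns `True`, reflecting the third case.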

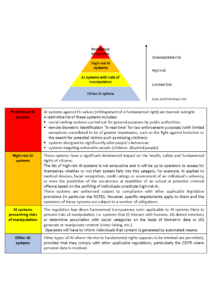

A risk-based approach

The regulation defines artificial intelligence by its ability to generate results such as content, predictions, recommendations or decisions that influence the environment with which the system interacts, whether physical or digital. It adopts a risk-based approach and distinguishes between uses of AI that create an unacceptable risk, a high risk, and a low or minimal risk.

High-risk AI systems must meet the following minimum requirements:

- Establish a risk management system: this system consists of a continuous iterative process which takes place throughout the life cycle of a high-risk AI system and which must be periodically and methodically updated.

- Ensure the quality of the datasets: the training, validation and test datasets must meet quality criteria, notably by being relevant, representative, error-free and complete. The aim is chiefly to avoid “algorithmic discrimination”.

- Formalise technical documentation: technical documentation containing all the information needed to assess the conformity of a high-risk AI system must be drawn up and kept up to date before the system is placed on the market or put into service.

- Provide for traceability: high-risk AI systems must be designed and developed with features that automatically record events (“logs”) while the systems operate.

- Provide transparent information: high-risk AI systems will be accompanied by a user manual containing information on the characteristics of the AI (identity and contact details of the supplier, characteristics, capabilities and performance limits of the AI system, human control measures, etc.) that is accessible and understandable to users.

- Provide for human control: effective control by natural persons must be provided for during the period of use of the AI system.

- Ensure system security: high-risk AI systems must be designed and developed to achieve an appropriate level of accuracy, robustness and cybersecurity, and to perform consistently in these respects throughout their lifecycle.

All players in the supply chain – suppliers, importers and distributors alike – are subject to these obligations, so everyone will have to assume their responsibilities and be even more vigilant.

In particular, suppliers must:

- demonstrate compliance with the above minimum requirements by maintaining technical documentation;

- subject their AI systems to a conformity assessment procedure before they are placed on the market or put into service;

- take the necessary corrective measures to bring the AI system into compliance, withdraw it or recall it;

- cooperate with national authorities;

- notify serious incidents and malfunctions involving a high-risk AI system placed on the market to the supervisory authorities of the Member State where the incident occurred, no later than 15 days after the supplier becomes aware of the serious incident or malfunction.

It should be noted that these obligations also apply to the manufacturer of a product that incorporates a high-risk AI system.

- The importer of a high-risk AI system will have to ensure that the supplier of this AI system has followed the appropriate conformity assessment procedure, that the technical documentation is established and that the system bears the required conformity marking and is accompanied by the required documentation and instructions for use.

- Distributors will also have to check that the high-risk AI system they intend to place on the market bears the required CE conformity marking, that it is accompanied by the required documentation and instructions for use, and that the supplier and importer of the system, as the case may be, have complied with their obligations.
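The 15-day window for notifying serious incidents, listed among the suppliers' obligations above, lends itself to a small date calculation. The sketch below assumes calendar days counted from the day the supplier becomes aware; the text above does not spell out the counting method, and the function names are hypothetical.

```python
from datetime import date, timedelta

# Assumption: the 15 days are calendar days, counted from the awareness date.
NOTIFICATION_WINDOW_DAYS = 15


def notification_deadline(aware_on: date) -> date:
    """Latest date on which the notification can still be made."""
    return aware_on + timedelta(days=NOTIFICATION_WINDOW_DAYS)


def is_notified_on_time(aware_on: date, notified_on: date) -> bool:
    """True if the notification falls on or before the deadline."""
    return notified_on <= notification_deadline(aware_on)
```

Under these assumptions, a supplier who becomes aware of an incident on 14 June 2023 would have until 29 June 2023 to notify the authority.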

Enforcement and governance

At national level, the Member States will have to designate one or more competent national authorities, including the national supervisory authority responsible for monitoring the application and implementation of the Regulation.

A European Artificial Intelligence Committee (made up of the national supervisory authorities) will be set up to provide advice and assistance to the European Commission, in particular on the consistent application of the Regulation within the EU. Notified bodies will carry out the conformity assessment of AI systems. Notified bodies should be designated by the competent national authorities, provided that they comply with a set of requirements relating in particular to their independence, competence and absence of conflicts of interest.

The regulation also supports SMEs and start-ups through the establishment of AI regulatory sandboxes and other measures to reduce the regulatory burden.

AI regulatory sandboxes will provide a controlled environment to facilitate the development, testing and validation of innovative AI systems for a limited time, according to a specific plan, before they are placed on the market or put into service.

Penalties

The AI Act provides for three penalty ceilings depending on the nature of the offence:

- Administrative fines of up to €30,000,000 or, if the offender is a company, up to 6% of its total worldwide annual turnover in the previous financial year, whichever is higher, for:

— non-compliance with the ban on artificial intelligence practices;

— non-compliance of the AI system with the requirements relating to data quality criteria.

- Failure of the AI system to comply with the requirements or obligations of the other provisions of the AI Act is subject to an administrative fine of up to €20,000,000 or, if the offender is a company, up to 4% of its total worldwide annual turnover in the previous financial year, whichever is higher.

- Providing incorrect, incomplete or misleading information to notified bodies and competent national authorities in response to a request is subject to an administrative fine of up to €10,000,000 or, if the offender is a company, up to 2% of its total worldwide annual turnover in the previous financial year, whichever is higher.
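To make the arithmetic of the three ceilings concrete, here is a minimal sketch that computes the maximum possible fine for a given tier. The tier labels and the `fine_ceiling` function are hypothetical, and the sketch assumes the "whichever is higher" rule applies to all three tiers when the offender is a company. It gives the upper limit only, not the fine an authority would actually impose.

```python
from typing import Optional

# Tier label -> (fixed ceiling in euros, percentage of worldwide annual turnover).
# The labels are illustrative; the amounts are those listed above.
CEILINGS = {
    "prohibited_practice_or_data_quality": (30_000_000, 6),
    "other_obligation": (20_000_000, 4),
    "incorrect_information": (10_000_000, 2),
}


def fine_ceiling(tier: str, worldwide_turnover_eur: Optional[int] = None) -> int:
    """Return the maximum administrative fine in euros for the given tier.

    Pass the previous financial year's worldwide turnover for a company;
    leave it as None for an offender that is not a company.
    """
    fixed_cap, percent = CEILINGS[tier]
    if worldwide_turnover_eur is None:
        return fixed_cap
    # Assumption: for companies, the higher of the two amounts is the ceiling.
    turnover_cap = worldwide_turnover_eur * percent // 100
    return max(fixed_cap, turnover_cap)
```

For a company with a worldwide turnover of €1,000,000,000, `fine_ceiling("prohibited_practice_or_data_quality", 1_000_000_000)` gives 60,000,000, because 6% of turnover exceeds the €30,000,000 fixed amount. With a turnover of €100,000,000, `fine_ceiling("incorrect_information", 100_000_000)` gives 10,000,000, because 2% of turnover (€2,000,000) is below the fixed amount.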